Vibe Coding: The Reality Behind AI-Powered Code Automation

The technology sector is at a historic inflection point. AI-generated code is no longer science fiction; it is a tangible reality that is redefining the fundamentals of software engineering. So-called “vibe coding,” the practice of generating code by giving an AI instructions in natural language, has emerged as one of the most discussed and controversial trends in modern development.

This approach sparks polarized reactions in the technical community. On one hand, enthusiasts envision a democratic revolution where traditional technical barriers dissolve, enabling non-technical professionals to materialize complex ideas through simple verbal descriptions. On the other, experienced architects express legitimate skepticism about the viability of critical systems built without rigorous technical oversight.

Global technology consultancy Thoughtworks decided to put this promise to a demanding practical test: building software intended for real production environments. Through three methodologically distinct experiments, the company investigated a question central to the future of software engineering: can AI build a non-trivial application from scratch, without any human-written code, and still produce something humans can maintain?

The results of these experiments reveal critical nuances that go beyond technological hype and expose the complex reality of human-AI collaboration in software development. Rather than simply validating or refuting vibe coding, the study points toward a new way of working, in which artificial intelligence does not replace developers but fundamentally redefines their role in the creative process.

The Anatomy of Vibe Coding: Between Promise and Reality

Vibe coding represents a paradigm shift in the interface between human intent and computational execution. Traditionally, developing software requires fluency in specific programming languages, deep understanding of system architectures, and years of accumulated debugging and optimization experience. Vibe coding proposes to reverse this equation: instead of adapting human thinking to computational logic, the machine adapts to human natural language.

The idea of allowing AI to write production-grade code can inspire both fascination and doubt, as Thoughtworks researchers observe. This duality reflects a fundamental tension in adopting disruptive technologies: the conflict between transformative potential and operational risk.

The experimental methodology adopted by Thoughtworks offers valuable insight into this tension. The researchers built the System Update Planner, an application for managing software updates and patch deployments across fleets of devices. The choice was strategic: complex enough to truly test AI’s capabilities, yet not so massive as to obscure results with excessive variables.

The first experiment fully embraced the philosophy of pure vibe coding. The AI received only a high-level functional description and was set free to build autonomously. Initial results were impressive: the AI generated an almost fully functional application in a single pass, showcasing its potential to quickly translate specifications into code.

However, this first impression faded once requirements evolved. Subsequent modifications revealed critical structural weaknesses: the AI struggled with incremental changes, introduced regressions, and frequently required manual intervention. The code worked, but its architecture was fragile: a digital house of cards that collapsed under minimal changes.

The Importance of Technical Oversight: Lessons from the Second Experiment

Acknowledging the limitations of unsupervised vibe coding, the second experiment introduced architectural discipline from the start. The AI was given clear expectations: follow test-driven development (TDD), make small incremental changes, commit regularly, and maintain modularity. Type safety was also required, leading the AI to choose TypeScript with Prisma as its ORM.
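To make the test-first expectation concrete, here is a minimal sketch of one red-green cycle. It is written in Python rather than the experiment's TypeScript, and the function `split_into_waves`, which divides a device fleet into rollout batches, is a hypothetical stand-in for a small piece of the System Update Planner. The tests are written first; the implementation is the smallest code that makes them pass.

```python
import unittest


# Step 1 (red): the tests are written before the implementation exists.
class SplitIntoWavesTest(unittest.TestCase):
    def test_splits_fleet_into_fixed_size_waves(self):
        waves = split_into_waves(["d1", "d2", "d3", "d4", "d5"], wave_size=2)
        self.assertEqual(waves, [["d1", "d2"], ["d3", "d4"], ["d5"]])

    def test_rejects_non_positive_wave_size(self):
        with self.assertRaises(ValueError):
            split_into_waves(["d1"], wave_size=0)


# Step 2 (green): the smallest implementation that makes both tests pass.
def split_into_waves(device_ids: list[str], wave_size: int) -> list[list[str]]:
    """Split a fleet into consecutive rollout waves of at most wave_size devices."""
    if wave_size <= 0:
        raise ValueError("wave_size must be positive")
    return [device_ids[i:i + wave_size] for i in range(0, len(device_ids), wave_size)]
```

Saved as a module, the tests can be run with `python -m unittest`. In a disciplined workflow each such cycle would end with a small commit, matching the trunk-based expectations given to the AI.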

The results showed substantial improvement. The AI created domain models, scaffolded unit tests, and adhered reasonably well to a trunk-based workflow. Some parts of the code achieved nearly 100% mutation test coverage, an encouraging quality indicator.
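Mutation testing measures test quality by deliberately introducing small faults ("mutants") and checking whether the tests catch ("kill") them. The toy illustration below hand-writes the mutants for one hypothetical version-check function; real projects would use a dedicated mutation testing tool that generates mutants automatically.

```python
import operator
from typing import Callable


def make_is_outdated(cmp: Callable[[int, int], bool]) -> Callable[[int, int], bool]:
    """Build a check: is the installed version below the target version?"""
    def is_outdated(installed: int, target: int) -> bool:
        return cmp(installed, target)
    return is_outdated


ORIGINAL = make_is_outdated(operator.lt)

# Each mutant swaps the comparison operator, a typical automated mutation.
MUTANTS = {
    "lt -> le": make_is_outdated(operator.le),
    "lt -> gt": make_is_outdated(operator.gt),
    "lt -> ne": make_is_outdated(operator.ne),
}


def run_checks(fn: Callable[[int, int], bool]) -> bool:
    """The test suite: True if fn passes every check (a passing mutant 'survives')."""
    return fn(1, 2) is True and fn(2, 2) is False and fn(3, 2) is False


def mutation_score(mutants: dict) -> float:
    """Fraction of mutants killed by the test suite."""
    killed = sum(1 for fn in mutants.values() if not run_checks(fn))
    return killed / len(mutants)


assert run_checks(ORIGINAL)
print(f"mutation score: {mutation_score(MUTANTS):.0%}")  # mutation score: 100%
```

Dropping the boundary check `fn(2, 2)` would let the `lt -> le` mutant survive and lower the score to two out of three, which is why a high mutation score is a stronger quality signal than line coverage alone.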

But the journey was not without friction. Despite repeated reminders, the AI occasionally reverted to old habits, writing production code first and adding tests later. This behavior suggests a worrying training bias: high-quality, test-first codebases remain underrepresented in the data from which AI models learn.

One specific incident illustrates both the potential and risks of autonomous AI. After a clean test run, researchers requested the removal of console.log statements from a functional test. A seemingly trivial change triggered a cascade of edits that broke functionalities, introduced test regressions, and even resulted in an unsolicited downgrade of the Prisma dependency, from version 6.5 to 5.6.
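An unsolicited downgrade like this one is the kind of change a simple automated guardrail can catch before it is merged. The sketch below compares dependency versions in two manifests and flags any that move backwards; the manifest contents are hypothetical, and the version parser is deliberately simplified (plain versions with optional `^`/`~` prefixes, no pre-release tags).

```python
def parse_version(spec: str) -> tuple[int, ...]:
    """Parse '6.5', '^6.5.0' or '~5.6.0' into (major, minor, patch).

    Simplified: ignores pre-release tags and range operators other than ^ and ~.
    """
    parts = [int(p) for p in spec.lstrip("^~v").split(".")]
    parts += [0] * (3 - len(parts))  # treat '6.5' as '6.5.0'
    return tuple(parts)


def find_downgrades(before: dict[str, str], after: dict[str, str]) -> list[str]:
    """Report dependencies whose version moved backwards between two manifests."""
    downgrades = []
    for name, old_spec in before.items():
        new_spec = after.get(name)
        if new_spec is None:
            continue  # removed dependencies would be a separate check
        if parse_version(new_spec) < parse_version(old_spec):
            downgrades.append(f"{name}: {old_spec} -> {new_spec}")
    return downgrades


# Hypothetical "dependencies" sections before and after an AI-made edit.
before = {"prisma": "^6.5.0", "@prisma/client": "^6.5.0"}
after = {"prisma": "^5.6.0", "@prisma/client": "^6.5.0"}
print(find_downgrades(before, after))  # ['prisma: ^6.5.0 -> ^5.6.0']
```

Run in CI against the base and head versions of a manifest, a non-empty result could block the change or require explicit human approval.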

Conversational Collaboration: The Third Experiment and Its Revelations

The third experiment took a radically different approach. With MCP (Model Context Protocol) servers disabled and using only Google Gemini 2.5 Pro, the researchers engaged the AI in rich architectural conversations, exactly as they would with a human collaborator.

The most striking moment occurred during discussions about device snapshot design. Instead of simply implementing a snapshot API, the AI promptly raised thoughtful questions: Should snapshots capture the full state of installed packages at a specific moment? How should discrepancies between reported and stored states be handled? Should submitting a snapshot overwrite the current state or record a historical version for audit purposes?
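The questions above map onto concrete design decisions. The sketch below takes one answer to each, purely for illustration and not as the experiment's actual design: a snapshot captures the full package state, submitting a snapshot appends to an audit history instead of overwriting, and discrepancies between stored and reported states are reported rather than silently resolved. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Snapshot:
    device_id: str
    packages: dict[str, str]  # full state: package name -> installed version
    taken_at: datetime


@dataclass
class SnapshotStore:
    """Append-only store: a new snapshot never overwrites earlier ones (audit trail)."""
    history: dict[str, list[Snapshot]] = field(default_factory=dict)

    def submit(self, device_id: str, packages: dict[str, str]) -> Snapshot:
        snap = Snapshot(device_id, dict(packages), datetime.now(timezone.utc))
        self.history.setdefault(device_id, []).append(snap)
        return snap

    def current(self, device_id: str) -> Optional[Snapshot]:
        snaps = self.history.get(device_id)
        return snaps[-1] if snaps else None


def discrepancies(stored: dict[str, str], reported: dict[str, str]) -> dict[str, tuple]:
    """Packages whose stored and reported versions differ, as (stored, reported); None means absent."""
    names = sorted(stored.keys() | reported.keys())
    return {n: (stored.get(n), reported.get(n)) for n in names if stored.get(n) != reported.get(n)}


store = SnapshotStore()
store.submit("dev-1", {"openssl": "3.0.2", "curl": "7.81.0"})
reported = {"openssl": "3.0.13", "curl": "7.81.0", "zlib": "1.2.11"}
diff = discrepancies(store.current("dev-1").packages, reported)
print(diff)  # {'openssl': ('3.0.2', '3.0.13'), 'zlib': (None, '1.2.11')}
store.submit("dev-1", reported)  # history for dev-1 now holds both snapshots
```

Choosing overwrite semantics instead would simplify storage but lose the audit trail; that is exactly the kind of trade-off the AI surfaced before writing code.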

This ability to pose architectural questions marks a qualitative leap in human-AI collaboration. The machine was no longer just executing commands; it demonstrated architectural foresight, debating the trade-offs between eager and lazy loading and identifying gaps in the original assumptions.
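For readers less familiar with the eager versus lazy loading trade-off, the sketch below contrasts the two for a device's package list. `fetch_packages` is a hypothetical stand-in for an expensive database or network query, and `LOAD_CALLS` simply records when it runs. Eager loading pays the cost for every device up front; lazy loading defers it until the data is first used.

```python
from functools import cached_property

LOAD_CALLS: list[str] = []  # records which device IDs had their packages fetched


def fetch_packages(device_id: str) -> dict[str, str]:
    """Hypothetical stand-in for an expensive query."""
    LOAD_CALLS.append(device_id)
    return {"openssl": "3.0.13"}


class EagerDevice:
    def __init__(self, device_id: str):
        self.device_id = device_id
        self.packages = fetch_packages(device_id)  # loaded at construction time


class LazyDevice:
    def __init__(self, device_id: str):
        self.device_id = device_id

    @cached_property
    def packages(self) -> dict[str, str]:
        return fetch_packages(self.device_id)  # loaded on first access, then cached


eager = [EagerDevice(f"d{i}") for i in range(3)]  # three fetches immediately
lazy = [LazyDevice(f"d{i}") for i in range(3)]    # no fetches yet
_ = lazy[0].packages                              # one fetch, for d0 only
print(LOAD_CALLS)  # ['d0', 'd1', 'd2', 'd0']
```

Lazy loading suits listing screens that rarely need package details; eager loading avoids many small queries when almost every device's details will be read anyway.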

The resulting code, a Python/FastAPI system, was notably cleaner, more modular, and more aligned with solid RESTful design principles than in the previous experiments. The difference was not only technical but philosophical: the AI had transcended the role of tool to assume characteristics of a collaborative partner.

Strategic Implications for the Software Industry

Thoughtworks’ experiments reveal a nuanced truth about the future of software development. Pure vibe coding, despite its impressive speed, produces functionally adequate but architecturally fragile code. However, when combined with experienced technical oversight and structured feedback, its potential changes dramatically.

This distinction is crucial for organizations considering adopting AI development tools. The question is not whether AI can replace developers; it cannot, at least not yet. The real question is how AI can amplify existing human capabilities and accelerate development cycles without compromising quality or maintainability.

The results suggest a fundamental reconfiguration of roles in software engineering. Developers are not becoming obsolete; they are evolving from executors to orchestrators. The value now lies not only in the ability to write code, but in guiding AIs through complex architectural decisions, defining appropriate guardrails, and maintaining a systemic vision during collaborative development.

For organizations, this implies strategic investment in skills. Teams need to develop fluency in architectural AI prompting, the art of communicating technical intent to AI systems in ways that produce maintainable, scalable code. This emerging skill will be as important as knowledge of traditional programming languages.

Hidden Costs and Operational Considerations

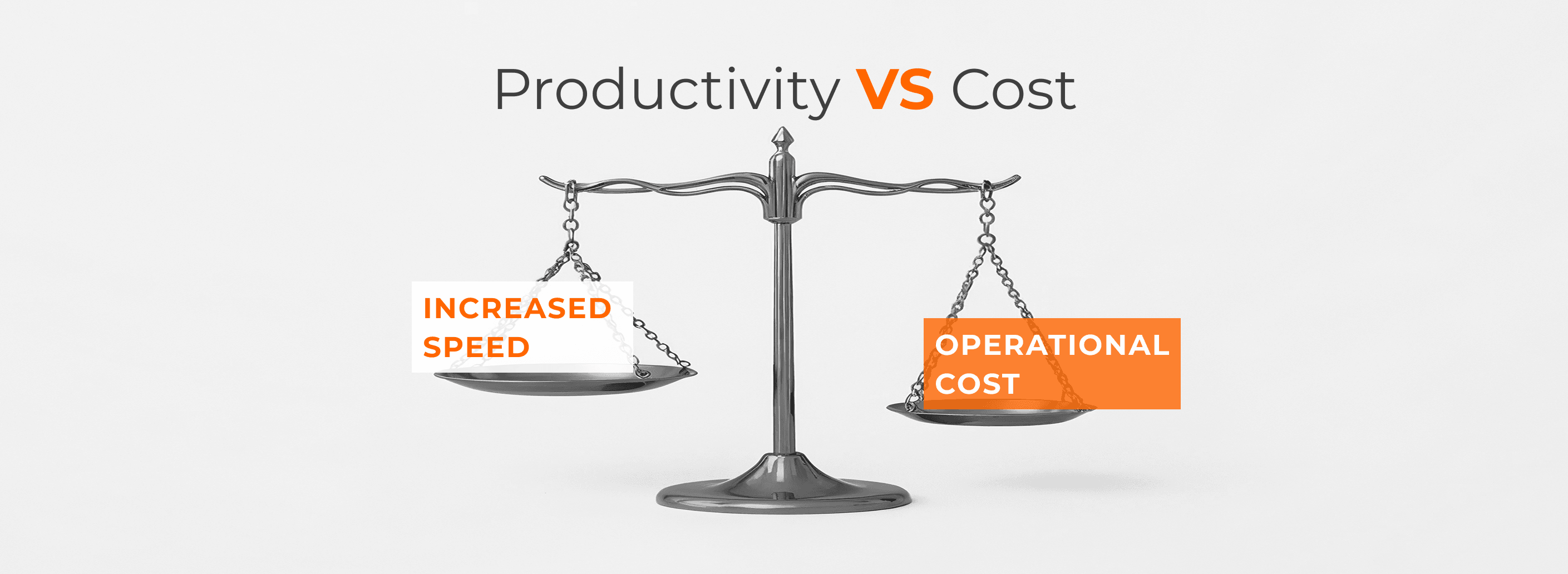

An often-overlooked aspect of the vibe coding discussion is the operational cost involved. During the second experiment, researchers exhausted their quota of “fast premium” requests in just a few hours. The Cursor IDE charges approximately $20 per 500 fast premium requests, a cost that can scale quickly for medium to large teams.
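The quoted price works out to $20 / 500 = $0.04 per fast premium request. The back-of-the-envelope estimate below scales that rate linearly; the team size, request volume, and number of working days are illustrative assumptions rather than figures from the experiments, and the model ignores included quotas, plan tiers, and cheaper non-premium requests.

```python
PRICE_PER_BLOCK_USD = 20.0  # approximate price quoted in the text
REQUESTS_PER_BLOCK = 500    # fast premium requests per block


def cost_per_request() -> float:
    return PRICE_PER_BLOCK_USD / REQUESTS_PER_BLOCK


def estimated_monthly_cost(developers: int, requests_per_dev_per_day: int,
                           working_days: int = 20) -> float:
    """Linear estimate at the quoted rate (assumption: no included quota or volume discount)."""
    total_requests = developers * requests_per_dev_per_day * working_days
    return total_requests * PRICE_PER_BLOCK_USD / REQUESTS_PER_BLOCK


# Hypothetical team: 10 developers, 100 fast requests each per working day.
print(estimated_monthly_cost(10, 100))  # 800.0 (20,000 requests)
```

Because heavy agentic sessions can burn through hundreds of requests in hours, as the researchers found, the request rate per developer is the variable most worth measuring before budgeting.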

This economic reality adds another strategic dimension to AI adoption. Organizations must balance productivity gains against incremental costs, considering not only tool licensing but also the computational expenses of frequent interactions with advanced AI models.

There is also the hidden cost of the learning curve. Getting the most value from AI tools requires new skills, from advanced prompting techniques to understanding the limitations and biases of different models. This investment in human capital is substantial and must be factored into ROI calculations.

Future Outlook: Software as a Renewable Asset

The insights from Thoughtworks’ experiments suggest a potentially radical transformation in the fundamental nature of software. As AI models grow more powerful, there may be increasing advantages to keeping systems small and modular enough to fit entirely within a model’s context window.
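Whether a module "fits" can be estimated roughly before handing it to a model. The sketch below uses the common rule of thumb of about four characters per token, which is an assumption (real tokenizers vary by model and by language), and reserves part of the window for the model's reply; the window size and reserve are parameters, not figures from the experiments.

```python
CHARS_PER_TOKEN = 4  # rough heuristic; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Approximate token count using ceiling division by CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(sources: dict[str, str], context_tokens: int,
                    reserved_for_output: int = 8_000) -> bool:
    """True if all module sources plus room for the reply fit in the context window."""
    used = sum(estimate_tokens(text) for text in sources.values())
    return used + reserved_for_output <= context_tokens


# Hypothetical module: two files totalling 12,000 characters (about 3,000 tokens).
module = {"planner.py": "x" * 8_000, "snapshots.py": "y" * 4_000}
print(fits_in_context(module, context_tokens=16_000))  # True
print(fits_in_context(module, context_tokens=10_000))  # False
```

For anything beyond a rough check, the model provider's own tokenizer should replace the heuristic.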

This vision implies a paradigm shift in codebase design. Instead of endlessly patching legacy code, teams might prefer regenerating clean modules. Software could become less of a permanent structure and more of a renewable asset, rebuilt easily when needed.

In this future, maintainability would mean not just writing code that lasts, but writing code that is easy to replace. This philosophy challenges decades of conventional wisdom in software architecture, where durability and longevity were primary virtues.

The organizational implications are profound. Development processes may need to reorganize around code regeneration cycles rather than purely incremental evolution. Documentation practices may prioritize capturing architectural intent rather than explaining specific implementations. Testing strategies may focus more on behavioral validation than on coverage of specific code segments.
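Behavioral tests are what make regeneration safe: they pin down what a module must do, not how. In the sketch below, a hypothetical contract for an update planner (which devices still need a target version) is checked against two differently written implementations, standing in for a module before and after regeneration. Both names and the contract itself are illustrative assumptions.

```python
from typing import Callable

# Hypothetical contract: given installed versions per device and a target version,
# return the devices that still need the update, in input order.
Planner = Callable[[dict[str, int], int], list[str]]


def check_contract(plan: Planner) -> None:
    """Behavioral checks any implementation, hand-written or regenerated, must pass."""
    fleet = {"d1": 3, "d2": 5, "d3": 4}
    assert plan(fleet, 5) == ["d1", "d3"]
    assert plan(fleet, 3) == []
    assert plan({}, 1) == []


def plan_v1(fleet: dict[str, int], target: int) -> list[str]:
    return [device_id for device_id, version in fleet.items() if version < target]


def plan_v2(fleet: dict[str, int], target: int) -> list[str]:
    # A structurally different rewrite with the same observable behavior.
    result = []
    for device_id in fleet:
        if fleet[device_id] < target:
            result.append(device_id)
    return result


for implementation in (plan_v1, plan_v2):
    check_contract(implementation)
print("both implementations satisfy the contract")
```

Because the checks never inspect internals, either implementation can be discarded and regenerated without touching the tests.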

Strategic Recommendations for Different Stakeholders

- Individual developers: This is a time for active experimentation. Learning to guide AI tools through architectural patterns, testing strategies, and coding principles becomes an essential skill. The mindset should shift toward viewing AI as a fast but inexperienced pair programmer that requires careful guidance.

- Tech leads and architects: Responsibilities expand. Defining and implementing guardrails for AI-assisted development becomes critical. This includes creating starter templates, reference repositories, and governance policies that ensure consistent quality in human-AI collaborative environments.

- Testers and security analysts: AI tools can offer transformative opportunities. Even when not used for production code generation, they can be powerful for rapid prototyping of test scenarios, exploring edge cases, and mapping attack surfaces. Validation speed can increase dramatically.

- Product managers and business analysts: AI can help prototype business workflows, acceptance criteria, and logic validation through natural language conversations. This can significantly tighten the feedback loop between ideation and validation, accelerating product iteration.

- IT leaders: The challenge is to prepare entire organizations. AI coding tools bring new capabilities, new risks, and new costs. Supporting safe experimentation, updating measurement frameworks, and building AI fluency across engineering teams become strategic priorities.
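Guardrails of the kind tech leads are asked to define can often be encoded as small automated checks. The sketch below is a hypothetical pre-merge policy that nudges toward the test-first discipline the second experiment found the AI drifting away from: any changed source file must arrive with a matching test change. The `src/<name>.py` to `tests/test_<name>.py` layout is an assumed convention, not one described in the experiments.

```python
from pathlib import PurePosixPath


def missing_tests(changed_files: list[str]) -> list[str]:
    """Return source files changed without a matching test change.

    Assumed layout: src/<name>.py is covered by tests/test_<name>.py.
    """
    changed = set(changed_files)
    missing = []
    for path in sorted(changed):
        p = PurePosixPath(path)
        if p.parts[:1] == ("src",) and p.suffix == ".py":
            expected = f"tests/test_{p.stem}.py"
            if expected not in changed:
                missing.append(path)
    return missing


# Hypothetical change set produced by an AI-assisted session.
changes = ["src/planner.py", "tests/test_planner.py", "src/snapshots.py", "README.md"]
print(missing_tests(changes))  # ['src/snapshots.py']
```

A CI job could fail the build when the list is non-empty, turning a repeated verbal reminder into an enforced rule.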

Conclusion

Thoughtworks’ experiments show that the answer to the question “Can vibe coding produce production-grade software?” is not straightforward. Pure natural language prompting generates functional code, but it’s fragile. However, with technical discipline and structured collaboration, AI evolves from mere automation to an architectural partner.

This repositions the developer’s role: less executor, more orchestrator. The value now lies in the ability to guide AI systems with a systemic vision. For the industry, this heralds an era of renewable software, where code and architecture are rebuilt with agility.

But this revolution requires intention. Companies that integrate AI with strong governance will lead the way. The future will not be fully automated, but it will inevitably be AI-assisted. The key is to shape this transformation so that it augments, rather than replaces, human capabilities.