Is Readable Code Becoming Obsolete? The Silent AI Revolution in Software Development

For decades, code readability has been one of the core pillars of software engineering. Practices such as Clean Code, the SOLID principles, and peer code review have shaped generations of developers around a seemingly unquestionable premise: code should be written for humans to read. Robert C. Martin popularized the observation that code is read far more often than it is written (in Clean Code he puts the ratio of time spent reading to time spent writing at well over ten to one), establishing a paradigm that guided the industry for years.

However, a silent transformation is underway in tech labs around the world. Artificial intelligence systems are no longer merely assisting with code generation; they are beginning to produce solutions optimized exclusively for machine processing. This fundamental shift raises a provocative question: are we witnessing the end of the readable-code era?

The answer isn’t purely technical; it’s philosophical. It touches the very nature of the human-machine relationship in the creative process, challenging assumptions that have underpinned decades of software development. When AI systems produce code that is functionally superior yet incomprehensible to humans, we face a dilemma that transcends syntax and architecture.

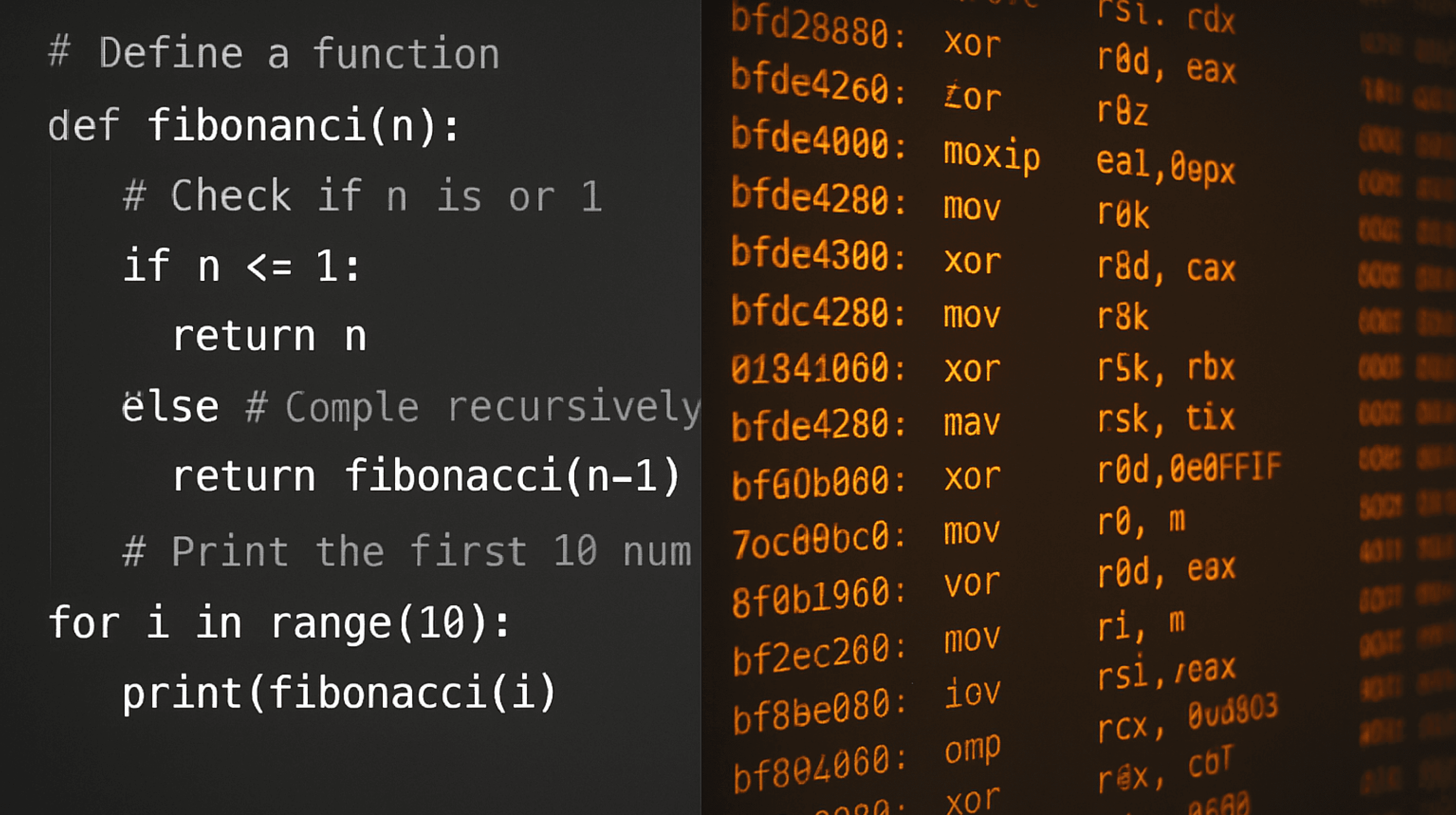

The Emergence of Machine-Oriented Code

The transformation is unfolding before our eyes. Microsoft CTO Kevin Scott has predicted that by 2030, 95% of all code will be generated by AI. But this quantitative shift masks a deeper qualitative one. Code produced by AI systems often has structural characteristics radically different from those of code written by human developers, frequently employing optimization patterns that are mathematically elegant but practically unreadable to conventional programmers.

Observations from development labs suggest that when AI systems are free to optimize code without readability constraints, they generate solutions built on computational patterns that human developers would rarely reach for. The result? Systems that are superior in performance and efficiency but structurally unintelligible to human analysis.

This evolution is not an anomaly; it is a natural consequence of computational optimization. When AI systems prioritize algorithmic efficiency over human readability, solutions emerge that systematically exploit complex mathematical relationships. Real-world examples can already be seen in compilers that use machine learning to guide optimization decisions, producing assembly code that, while highly efficient, is hard for even seasoned engineers to follow.
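The article names no specific code, so here is a small illustration of the readability trade-off it describes. This example is our own and is not AI-generated. It is a classic hand-written bit trick, the SWAR ("SIMD within a register") population count found in many bit-manipulation references. Both Python functions count the set bits of a 32-bit unsigned integer, and their names are hypothetical.

```python
def popcount_readable(x: int) -> int:
    """Count the set bits of a 32-bit unsigned integer, one bit at a time."""
    count = 0
    for _ in range(32):
        count += x & 1  # add the lowest bit
        x >>= 1         # shift the next bit into the lowest position
    return count


def popcount_swar(x: int) -> int:
    """Same result via the SWAR bit trick (input assumed in [0, 2**32))."""
    x = x - ((x >> 1) & 0x55555555)                 # bit counts per 2-bit field
    x = (x & 0x33333333) + ((x >> 2) & 0x33333333)  # bit counts per 4-bit field
    x = (x + (x >> 4)) & 0x0F0F0F0F                 # bit counts per byte
    return ((x * 0x01010101) & 0xFFFFFFFF) >> 24    # sum all bytes into the top byte
```

The loop states its intent directly. The second version replaces the loop with masks, shifts, and a single multiply, and a reviewer cannot confirm its correctness at a glance. The point here is the contrast in readability, not measured performance in Python.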

The Black Box Paradox

The proliferation of opaque algorithmic solutions introduces a fundamental paradox in modern software engineering. On the one hand, we gain systems that are demonstrably superior in performance, energy efficiency, and processing power. On the other, we lose the ability to understand the internal mechanisms behind those improvements.

This phenomenon is already observable across multiple contexts. Machine learning models exhibit emergent behaviors that even their creators cannot fully explain. AI-optimized compilers generate efficient assembly code whose logic resists conventional engineering explanation. Tools like GitHub Copilot sometimes produce working solutions without making it clear why they work.

The central question is not whether these systems work; they clearly do, often outperforming conventional alternatives. The real question is whether we’re willing to accept systems whose inner workings are inaccessible to direct human comprehension.

Implications for Auditing and Quality Control

The transition to unreadable code presents significant challenges to established quality assurance processes. Traditional code reviews assume that experienced developers can detect structural issues, security vulnerabilities, and questionable architectural decisions by reading the source code.

When the code becomes difficult or impossible for humans to understand, these practices lose effectiveness. How do we detect algorithmic bias in systems whose decision-making is opaque? How do we identify security flaws in code that operates via non-conventional logic? How do we ensure regulatory compliance in systems that cannot be audited through traditional means?

The answer may lie in evolving our auditing methods. Instead of reviewing specific implementations, we may need techniques that assess systemic behavior through comprehensive automated testing, output pattern analysis, and formal verification of mathematical properties.
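Differential testing is one concrete form of behavioral auditing. You run an opaque implementation and a trusted, readable reference on the same inputs and flag any disagreement. The minimal Python sketch below assumes that such a reference exists. The function names, the hand-picked edge cases, and the simulated 32-bit overflow bug are hypothetical illustrations. The technique is randomized testing, not formal verification.

```python
import random
from typing import Callable, Iterator, Optional, Tuple

INT32_MIN, INT32_MAX = -2**31, 2**31 - 1
Pair = Tuple[int, int]


def pairs(n: int, seed: int = 0) -> Iterator[Pair]:
    """Yield hand-picked edge cases first, then n seeded random 32-bit pairs."""
    yield from [(0, 0), (INT32_MIN, INT32_MAX), (INT32_MAX, INT32_MIN),
                (-1, 0), (0, -1), (INT32_MAX, INT32_MAX)]
    rng = random.Random(seed)
    for _ in range(n):
        yield rng.randint(INT32_MIN, INT32_MAX), rng.randint(INT32_MIN, INT32_MAX)


def find_counterexample(reference: Callable[[int, int], int],
                        candidate: Callable[[int, int], int],
                        inputs: Iterator[Pair]) -> Optional[Pair]:
    """Return the first input on which the two functions disagree, else None."""
    for a, b in inputs:
        if reference(a, b) != candidate(a, b):
            return (a, b)
    return None


def max_reference(a: int, b: int) -> int:
    # Readable statement of "the larger of two integers".
    return a if a > b else b


def max_branchless(a: int, b: int) -> int:
    # Opaque but correct: -(a > b) is -1 (all bits set) or 0, so the mask
    # keeps either a ^ b (turning b into a) or nothing (leaving b).
    return b ^ ((a ^ b) & -(a > b))


def max_overflow_bug(a: int, b: int) -> int:
    # Subtly wrong (hypothetical): simulates a - b wrapping in 32-bit arithmetic.
    diff = (a - b + 2**31) % 2**32 - 2**31
    return a if diff > 0 else b
```

For example, `find_counterexample(max_reference, max_branchless, pairs(10_000))` returns `None`. The same call with `max_overflow_bug` returns `(-2147483648, 2147483647)`, because the hand-picked edge case exposes the wraparound immediately. The auditor never needs to understand why the branchless version works, only that it agrees with the reference.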

Redefining Clean Code in the AI Era

Technological evolution suggests a fundamental reinterpretation of what "clean code" means. Instead of focusing on syntactic elegance and structural clarity, clean code may evolve to emphasize precise goal specification, comprehensive documentation of expected behaviors, and rigorous definition of interfaces.

In this new paradigm, code quality would not be measured by readability, but by the clarity of requirements and the robustness of result validation. The focus would shift from implementation to specification, from the how to the what.

This transformation doesn’t eliminate the need for technical rigor; it redirects it. Documentation becomes critical not to explain the code but to define behavior. Automated testing becomes essential not to validate logic but to verify conformance to specifications.
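Here is one way to make "documentation that defines behavior" concrete. The minimal Python sketch below writes the specification of sorting as two checkable properties: the output is ordered, and it is a permutation of the input. It then tests an implementation purely as a black box. The built-in `sorted` stands in for an opaque generated implementation, and the helper names are hypothetical. This style is usually called property-based testing, and libraries such as Hypothesis automate the input generation.

```python
import random
from collections import Counter
from typing import Callable, List

Sorter = Callable[[List[int]], List[int]]


def satisfies_sort_spec(inp: List[int], out: List[int]) -> bool:
    """The specification: states what a sort must produce, not how."""
    ordered = all(out[i] <= out[i + 1] for i in range(len(out) - 1))
    same_elements = Counter(inp) == Counter(out)  # output is a permutation of input
    return ordered and same_elements


def conforms(impl: Sorter, trials: int = 1000, seed: int = 0) -> bool:
    """Check impl against the spec on seeded random inputs, treating it as a black box."""
    rng = random.Random(seed)
    for _ in range(trials):
        data = [rng.randint(-50, 50) for _ in range(rng.randint(0, 20))]
        # Pass a copy so an implementation that mutates its argument
        # cannot corrupt the input the spec is checked against.
        if not satisfies_sort_spec(data, impl(list(data))):
            return False
    return True
```

Here `conforms(sorted)` returns `True`. An implementation that silently drops duplicates, such as `lambda xs: sorted(set(xs))`, violates the permutation property and is rejected on inputs with repeated values.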

The Future of Human Participation in Development

The most profound question raised by this evolution is not technical but existential: what will be the role of human developers in a world where AI produces superior and incomprehensible code?

One possibility is the evolution of developers into specification architects: professionals specialized in translating business requirements into precise technical specifications that AI can implement. Another is specialization in the validation and testing of automated systems, developing sophisticated methodologies to verify behavior without necessarily understanding the underlying implementation.

Alternatively, we may see a bifurcation in the industry: critical systems may retain human-readable and auditable code, while high-performance applications adopt fully automated, opaque solutions.

Ethical and Societal Considerations

The shift toward unreadable code raises significant ethical questions about algorithmic transparency and technological responsibility. In domains like healthcare, finance, and criminal justice, where automated decisions directly impact human lives, algorithmic opacity may be unacceptable regardless of performance benefits. Emerging regulations such as the EU AI Act are beginning to impose transparency and human-oversight requirements on high-risk applications. This regulatory trend could create a division between “explainable” code for regulated environments and “optimized” code for less sensitive contexts.

The issue of responsibility also becomes more complex when autonomous systems produce code that no human fully understands. Who is accountable for failures in systems whose inner workings are opaque even to their creators?

Conclusion

The obsolescence of readable code is not just a technical evolution but a fundamental transformation in the relationship between humans and computational systems. We are transitioning from an era where machines executed clearly specified human instructions to one where machines create solutions that humans cannot fully comprehend.

This shift is not inherently negative. AI systems can produce more efficient, secure, and robust solutions than those built by human programmers. The challenge lies in developing new frameworks for governance, auditing, and control that maintain appropriate human oversight without limiting technological potential.

The future will likely not be marked by the complete abandonment of readable code, but by the coexistence of multiple development paradigms. Critical systems may retain transparency requirements, while high-performance applications push the boundaries of automation.

The real question is not whether readable code will become obsolete, but how we adapt our practices, regulations, and expectations to a reality where full human comprehension of computational systems may no longer be feasible, or even necessary.

In this new paradigm, the value of human developers lies not in writing elegant code, but in the ability to precisely specify problems, rigorously validate solutions, and ethically oversee automated systems. The code may become unreadable, but human responsibility remains indispensable.